Prospect CATO

$19.99

"Boss, delegate this to me."

Forward this email. Claim your workflows. Track who reads.

- ✓ Register 5 prospective workflows

- ✓ Track reading delegation

- ✓ Observer standing at Constitutional Convention

- ✓ Path to full CATO certification

Full CATO Certification available after reading • $1,995

What Each Seat Includes

Share the book link with your team. No purchase required.

"Sarah, read this manual. When you finish, I'll sponsor your CATO certification."

Reward readers with official CATO credentials. Company investment.

- Badge progression: 📖→📚→🛡️→👑

- Hosted credential for LinkedIn & email

- Gap Analysis reports for the board

$1,995 per CATO — one certified officer per AI workflow

The audit that lets you tell the board:

"We will do better next time. Here's the structural fix."

- Verifiable score, not a promise

- PDF report for stakeholders

- Maps gaps before regulators find them

"Boss, delegate this to me."

Claim workflows • Delegate reading

After reading • $1,995

Not the decision maker? Forward this to your CEO. They delegate, you advance.

You're Liable for AI.

When it breaks—and it will—they'll ask what you did to prevent it.

You knew. You had a chance. What did you do?

SCALING IS NOT SAFETY

🧊 You Are Driving on Black Ice

Your AI agents have no idea when they're wrong.

No brakes. No steering wheel. No chains.

At any speed, that's indefensible negligence.

Black ice is invisible. You don't know you're on it until you're spinning.

Your AI sounds confident. It has no idea when it's wrong.

Happening Right Now

The Pentagon gave Anthropic a deadline to strip their safety constraints. The most capable AI company is being forced to choose between ethics and survival.

This isn't hypothetical. The floor friction crisis is playing out in real-time.

→ Read: Why Ethics Is Floor Friction, Not Ceiling Target

Billions in AI investment. Trickle in deployment.

Companies are terrified to deploy because "Naked Agents" degrade brand equity.

Saving $3,000/month on a chatbot isn't worth $100,000 in reputation damage.

You're right not to trust AI. That's the correct instinct.

→ Here's the insurance policy

We're not selling you AI. We agree it's dangerous.

We're selling you the Insurance Policy so you can finally use it.

The FIM Seat ensures your agents adhere to your brand geometry.

We're not just warning you—we're handing you the steering wheel.

🧠 Why This Works (The Neuroscience)

"Neurons that fire together, wire together."

— Hebbian Learning, the foundation of how brains actually work

The Physics Problem

When physics touches a computer, we call it noise and rerun the computation.

Cosmic rays flip bits? Rerun. Thermal noise corrupts memory? Rerun. Hardware degrades? Replace and rerun.

Digital systems treat physics as contamination to filter out—not ground to anchor into.

LLMs (Ungrounded)

Pattern matching without structure. Outputs have no geometric coordinates. When they're wrong, there's nothing to fix.

FIM (Grounded)

Outputs locked to coordinate space. Fire together, ground together. When they're wrong, you can find and fix the missing link.

This isn't wishful thinking. This is how memory works. FIM is the first system to apply it to AI governance.

When something goes wrong—and it will—can you promise it won't happen again?

That's not a management question. That's a physics question.

Without FIM: "It's random. We hope it doesn't happen again."

With FIM: "We identified the missing coordinate. Here's the structural fix. It won't happen again."

You need a Gap Analysis — the audit that lets you tell the board:

"We will do better next time. Here's how."

Designate someone from your team as your Certified AI Trust Officer (CATO).

One CATO per seat. They conduct assessments. You get verifiable proof.

AI drifts. FIM steers.

Every AI hallucinates sometimes. Wrong 20-40% of the time, with perfect confidence.

FIM ensures your agents stay on YOUR brand geometry.

Ask yourself:

How many agentic workflows do you run?

(Customer service bots, sales copilots, code assistants, research agents...)

What happens when one of them confidently says something wrong to a customer?

(Get them to say: "We lose the customer. Maybe get sued.")

What would it be worth to have a real path to victory on this?

(Not a promise. Proof you can point to.)

$1,995 per CATO. That's the math.

Less than one hour of legal consultation. Less than one angry customer churning.

A rounding error in your AI budget.

If You're Asking These Questions, This Is For You

CEO / Founders (especially mid-market)

"AI is my responsibility. I can't afford a governance team—how do I delegate this liability?"

Fortune 500s have departments for this. You have CATO—build an officer core internally, not a headcount line item.

CISO / Security Leaders

"How do I prove our AI isn't a liability?"

Gap Analysis gives you documented proof for the board.

Compliance / Risk Officers

"EU AI Act fines reach up to €35M or 7% of global annual turnover. Are we ready?"

Your CATO maps your gaps before regulators find them.

CIO / Chief AI Officers

"We can't promise it'll do better next time."

Without a Gap Analysis, you don't know what went wrong.

Board Members / Executives

"We need AI governance. Where do we start?"

Train your own CATO. Internal expertise, external validation.

See industry leaders facing this challenge →

Insurance & Reinsurance

- Joachim Wenning (Munich Re): €35M EU AI Act exposure

- Oliver Bäte (Allianz): €90B under AI management

- Andrew Witty (UnitedHealth): 150M lives affected

Financial Services

- Jamie Dimon (JPMorgan): $3.7T in AI-assisted decisions

- Larry Fink (BlackRock): $10T AUM AI allocation

- Jane Fraser (Citi): AI lending discrimination risk

Healthcare

- Dr. Tom Mihaljevic (Cleveland Clinic): AI diagnosis liability

- Gail Boudreaux (Elevance/Anthem): Prior auth AI

Tech & AI

- Satya Nadella (Microsoft): Azure AI attribution

- Dario Amodei (Anthropic): Constitutional AI limits

Not a Fortune 500? That's the point.

Mid-market CEOs are personally exposed. You can't hire a governance department—but you can certify your sharpest people as CATOs. Delegate the liability to an officer core you built, not a team you couldn't afford.

The Path: From Outsider to Standard-Setter

Those who understand the system shape it. Those who don't get shaped by it.

Read the Book

Your CATO candidate starts here. The book is free. Understanding it is the entry requirement.

Start reading →

Become the CATO

You're a CATO prospect until the company buys—but you're still a CATO. Build an officer core, not just one or two people.

📖→📚→🎓→👑

Shape the Standard

Your CATO conducts the Gap Analysis. Your representation influences how the standard evolves.

Write the rules, don't just follow them

Not ready to buy seats? Sign the Snowbird Declaration or Endorse the Standard to show support.

Recommended Packages

$19.99 per workflow seat + CATO certifications for your officer core

- 10 workflow seats @ $19.99

- 1 CATO certification

- Observer governance tier

Budget $2.2K for AI governance

- 50 workflow seats @ $19.99

- 3 CATO certifications

- Contributor governance tier

Budget $7K for AI governance

- 100 workflow seats @ $19.99

- 5 CATO certifications

- Voting Member tier

Budget $12K for AI governance

- 200 workflow seats @ $19.99

- 10 CATO certifications

- Founding Council tier

Budget $24K for AI governance

Custom packages available. Contact us for 500+ seat deployments.

$1,995 Per CATO

One seat per AI agent you plan to run. That's the recommendation.

Not enforced—just guidance. If you believe in running many agents, or want a hand in our success, buy more.

Governance influence scales with your stake:

CATO Certification is Separate

Anyone can study for free by reading the book. Publishing a CATO credential is a separate transaction, available once someone qualifies.

Why you won't regret this: You're not buying a product—you're buying a seat at the table before the rules are written.

Why you might regret waiting: In 2 years, AI governance will be mandatory. The question is whether you helped write it, or scrambled to comply with what others wrote.

This is a standards consortium membership, not an investment or security.

Participation provides input into technical standards development — no financial returns implied.

Not ready to commit? Show your support another way:

ThetaDriven

IAM FIM

Facial Recognition for Data

Position, not proximity.

Like faces: you read the shape, not vector similarity.

We built IAM FIM because vector DBs find what is near. We show what is where.

12 node identities. Unlimited depth. O(1) decisions.

One geometric key for humans AND AI agents - and we want to build it with you.

10 μs

Decision time

O(1)

Complexity

∞

Scale

Bid for standing by buying seats for your organization.

More seats = more influence in shaping the standard.

$1,995/seat • Organizations with highest seat counts get priority voice when we convene.

This is for you if...

• You worry about the liabilities of agentic AI

• You want to be able to say "we will do better next time," but you felt it, the splinter in your mind

• You know we have no clue what's going on in the black box (and if you think you can see inside, that's likely... a hallucination)

• You've heard the consensus: we can't fix AI reasoning any more than we can fix a car engine with the hood welded shut

What if identity and access wasn't a policy you enforce — but a shape you recognize?

You recognize a face before you read the nametag.

Your brain doesn't search a database of faces. It reads the shape. Instant recognition. We do the same thing for data permissions.

Instant

You know who it is

No lookup required

→

Same principle

Instant

Agent knows what it can access

No server call required

The precision?

More unique addresses than seconds since the Big Bang.

If you randomly guessed one identity per second since the universe began, you still wouldn't find it.

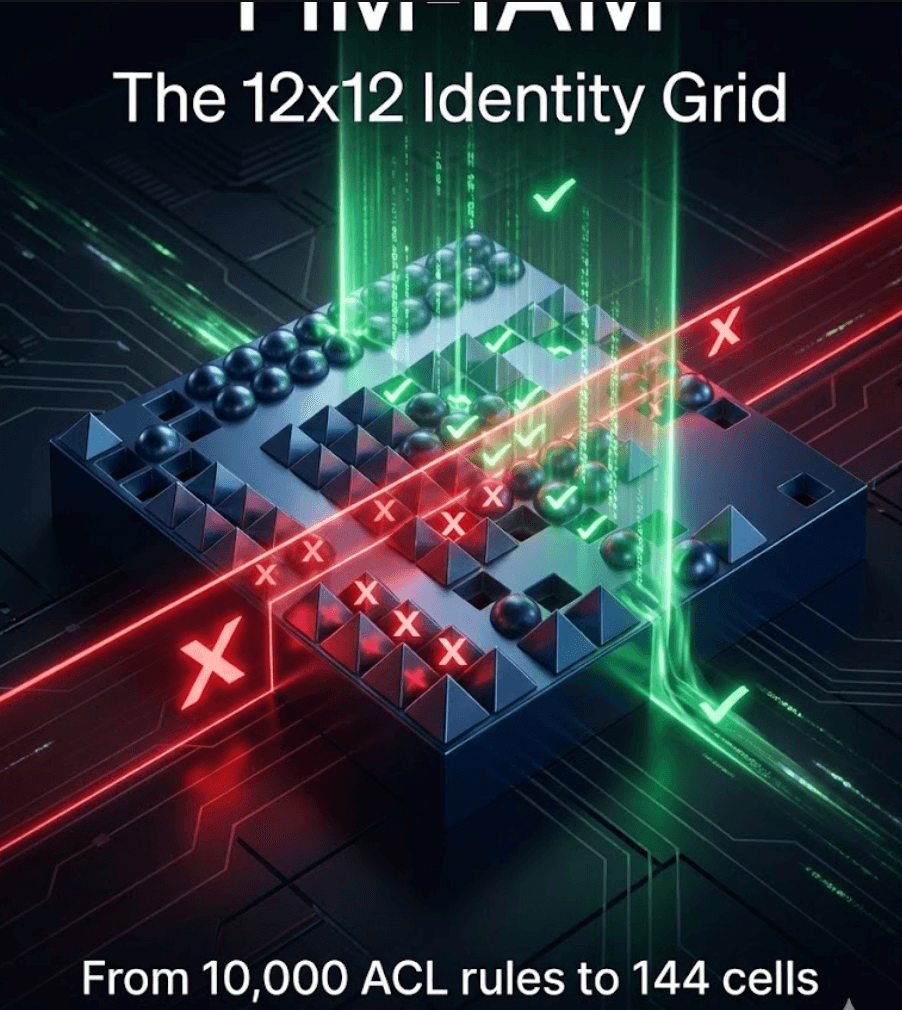

The paper explains how we achieve this with a 12×12 grid.
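As a rough sanity check on the scale claim, here is a minimal sketch. It assumes each of the 144 cells holds a single on/off bit, which is our simplification for illustration; the paper defines the actual encoding. It compares the resulting address count with an approximate age of the universe in seconds.

```python
# Assumption: each cell of the 12x12 grid is one bit. The real encoding is
# defined in the paper; this only illustrates the order of magnitude.
GRID_ROWS = 12
GRID_COLS = 12
CELLS = GRID_ROWS * GRID_COLS              # 144 cells

address_space = 2 ** CELLS                 # distinct on/off patterns

# Approximate age of the universe: about 13.8 billion years, in seconds.
SECONDS_PER_YEAR = 365.25 * 24 * 3600
seconds_since_big_bang = 13.8e9 * SECONDS_PER_YEAR

print(f"cells: {CELLS}")
print(f"address space: {address_space:.3e}")
print(f"seconds since Big Bang: {seconds_since_big_bang:.3e}")
print(f"addresses per elapsed second: {address_space / seconds_since_big_bang:.3e}")
```

Under that one-bit-per-cell assumption, the address count exceeds the number of elapsed seconds by many orders of magnitude, which is the point the comparison is making.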

IAM FIM Is the Implementation.

The Book Is the Why.

Tesseract Physics: Fire Together, Ground Together explains why S=P=H (Semantic = Physical = Hardware) is not metaphor—it's physics. Why cache misses, AI drift, and identity failures share one root cause. Why position-based verification beats probabilistic matching.

Read the book first to understand the Unity Principle. Then implement it with IAM FIM.

Theory → Implementation. Understanding → Action.

This Is Not a Playground

The AI frontier is a hostile wilderness. 90% of travelers die. Only the Grounded survive.

90%

Insecure Code

The wilderness wins

61%

Functional Only

Vibe Coding era

P=1

Grounded Certainty

Pioneer survives

The Tourist (Vibe Coding)

Visits the frontier for the "vibe." Takes some photos. Goes home.

• Relies on luck (probability)

• Confidence scores: "80% likely"

• When the storm hits, they freeze

The Pioneer (FIM Physics)

Goes to the frontier to build civilization. Knows entropy is trying to kill them.

• Relies on geometry (The Grid)

• Binary existence: P=1 (it IS or IS NOT)

• Laying the rails, not riding the horses

S = P = H (The Unity Principle)

Symbol = Physics = Hardware (i.e., closer to how humans actually work)

When these three are unified, the system cannot drift. Not because it chooses not to, but because the geometry forbids the motion.

This is not a promise. This is physics.

Your IAM was built for humans clicking buttons.

AI agents move 1000x faster.

If you're deploying AI agents, you already feel this:

Every time an agent needs to check permission, it calls a server. 400 milliseconds.

At 100 permission checks per minute, your agents spend 67% of their time waiting.

That's not a bottleneck. That's a competitive extinction event.

What if the agent already knew?

Like you recognize a face without checking a database, what if your agent could verify permission without calling a server?

That's IAM FIM. The agent carries a geometric identity. Permissions are checked locally in 10 microseconds instead of 400 milliseconds.

40,000x faster. No infrastructure changes.

"How can a simple grid handle enterprise scale?"

Great question. Your bank account number is 17 digits - it doesn't contain your money. It routes to the vault.

FIM works the same way. The 12×12 grid doesn't store your permissions. It addresses them.

The result? More unique identity addresses than seconds since the Big Bang.

The paper walks through exactly how we achieve this. It's elegant once you see it.

Why Traditional ACLs Break

Watch the race: 400 milliseconds vs 10 microseconds per check

Traditional ACL/RBAC

O(n^2) to O(n^3) per decision

IAM FIM Geometric

O(1) - Always 144 comparisons

AI agents make hundreds of permission decisions per minute. Traditional ACLs require 400 ms per check. At 100 checks per minute, that is 40 seconds of waiting every minute. At 10 μs per check, FIM handles the same 100 checks in 1 millisecond total.

While Your Agents Wait for Permission...

Your competitor with IAM FIM is already done.

| | Your Current IAM (Traditional ACL) | Competitor with IAM FIM |
|---|---|---|
| Permission checks per agent | 100/min (conservative estimate) | 100/min (same workload) |
| Latency per check | 400 ms (database round-trip) | 10 μs (local grid comparison) |
| Wait time per minute | 40 sec (67% of time waiting) | 1 ms (0.0017% of time waiting) |
| Per 8-hour day | 5.3 hours (wasted on permission checks) | 0.5 sec (total permission overhead) |
| With 10 agents running | 53 hours/day (cumulative agent wait time) | 5 seconds/day (cumulative permission overhead) |

The Math That Matters

Every minute your agents spend waiting for permission checks is a minute your competitor's agents are working.

At scale, this compounds. 10 agents x 5.3 hours/day = 53 hours of lost productivity daily.

That is not a rounding error. That is a competitive extinction event.
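The arithmetic behind these figures can be reproduced in a few lines. This is a sketch that uses only the numbers quoted on this page (100 checks per minute, 400 ms vs 10 μs per check, an 8-hour day, 10 agents); the function and variable names are ours, chosen for illustration.

```python
# Inputs are the page's own scenario, not measurements.
CHECKS_PER_MINUTE = 100
ACL_LATENCY_S = 0.400        # 400 ms database round-trip
FIM_LATENCY_S = 10e-6        # 10 μs local grid comparison
WORKDAY_MINUTES = 8 * 60
AGENTS = 10


def overhead(latency_s: float) -> dict:
    """Return permission-check wait figures for one agent and for the fleet."""
    wait_per_minute_s = CHECKS_PER_MINUTE * latency_s
    wait_per_day_s = wait_per_minute_s * WORKDAY_MINUTES
    return {
        "wait_per_minute_s": wait_per_minute_s,
        "share_of_time": wait_per_minute_s / 60,
        "wait_per_day_s": wait_per_day_s,
        "fleet_wait_per_day_s": wait_per_day_s * AGENTS,
    }


acl = overhead(ACL_LATENCY_S)
fim = overhead(FIM_LATENCY_S)
speedup = ACL_LATENCY_S / FIM_LATENCY_S

print(f"ACL: {acl['wait_per_minute_s']:.0f} s/min "
      f"({acl['share_of_time']:.0%} of the time), "
      f"{acl['wait_per_day_s'] / 3600:.1f} h/day per agent, "
      f"{acl['fleet_wait_per_day_s'] / 3600:.0f} h/day for {AGENTS} agents")
print(f"FIM: {fim['wait_per_minute_s'] * 1000:.0f} ms/min, "
      f"{fim['wait_per_day_s']:.2f} s/day per agent, "
      f"{fim['fleet_wait_per_day_s']:.1f} s/day for {AGENTS} agents")
print(f"Per-check speedup: {speedup:,.0f}x")
```

Swap in your own check rate and latency to see how the gap changes for your workload.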

40,000x

slower per decision

5.3 hrs

wasted per agent/day

$0

infrastructure change

We built IAM FIM so you don't have to give competitors a 40,000x advantage. Ready to work with us?

Read the Shape at a Glance

Three grids tell the complete story: what the agent can do, what the resource requires, and whether they match.

AI Agent Capabilities

Hot = can act | Cold = blocked

Resource Requirements

Sparse = precise requirements

Overlay Result

Green = covered | GRANTED in 10 μs

Why This Is Different (Not Just Better)

Asymmetry IS Policy

We encode policy in geometry: Cell(Team,Global) is not equal to Cell(Global,Team). Publishing upward requires approval authority; broadcasting downward requires delegation authority.

Meta-Access IS Visible

We separate operational access from classification authority. Writing TO a sensitivity level differs from writing AT that level. Your compliance team sees the shape, not just pass/fail.

Direction IS Audit

We capture escalation vectors in the grid itself. Your auditors see the shape of the action - where permission flowed, not just whether it was granted.

Agents ARE Portable

We designed FIM so agents carry their grid - no server dependency. Sub-agents inherit via bitwise AND. We built the only IAM model that scales with you.
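To make "inherit via bitwise AND" concrete, here is a minimal sketch. It assumes a grid is stored as a 144-bit integer with one bit per cell, and that a sub-agent receives the intersection of its parent's grid and the grid it requests. The helper names `cell_bit` and `inherit` are hypothetical, not part of any published IAM FIM API.

```python
GRID_SIZE = 12
GRID_CELLS = GRID_SIZE * GRID_SIZE          # 144 cells
FULL_MASK = (1 << GRID_CELLS) - 1           # all 144 bits set


def cell_bit(row: int, col: int) -> int:
    """Return the bit for cell (row, col), assuming row-major bit order."""
    if not (0 <= row < GRID_SIZE and 0 <= col < GRID_SIZE):
        raise ValueError("cell outside the 12x12 grid")
    return 1 << (row * GRID_SIZE + col)


def inherit(parent_grid: int, requested_grid: int) -> int:
    """A sub-agent gets only the cells present in BOTH grids."""
    return parent_grid & requested_grid & FULL_MASK


parent = cell_bit(0, 0) | cell_bit(3, 4) | cell_bit(6, 9)
requested = cell_bit(3, 4) | cell_bit(6, 9) | cell_bit(11, 11)
child = inherit(parent, requested)

# The child can never hold a cell its parent lacks.
print("child exceeds parent:", bool(child & ~parent & FULL_MASK))
print("child cells:", bin(child).count("1"))
```

Because AND can only clear bits, a chain of sub-agents can narrow permissions but never widen them, and the operation needs no server call. Note also that `cell_bit(3, 4)` and `cell_bit(4, 3)` are different bits, which is one simple way to picture the asymmetry described above.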

The "Click" Test

Traditional IAM: Reads the Label

Traditional IAM reads the brand name on the key ("User: Elias"), looks it up in a book, and hopes the book is up to date.

1. Parse the label

2. Query the database

3. Traverse role hierarchy

4. Evaluate policies

5. Cross-reference tags

6. Hope nothing changed

This is probabilistic. It guesses.

IAM FIM: Checks the Shape

When you insert a key into a deadbolt, the mechanism doesn't read the brand name. It checks the shape. If the geometry aligns, the lock turns instantly.

1. Agent carries 144-cell grid

2. Resource declares requirements

3. Single local comparison

4. Binary result: Click or no click

This is deterministic. It knows.
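The four steps above can be sketched in code. This is an illustration under our own assumptions: the grid is a 144-bit integer, and "the key fits" means every cell the resource requires is also set in the agent's grid. The names `cell` and `clicks` are hypothetical.

```python
GRID_SIZE = 12


def cell(row: int, col: int) -> int:
    """Bit for cell (row, col) in a 12x12 grid stored as one integer."""
    if not (0 <= row < GRID_SIZE and 0 <= col < GRID_SIZE):
        raise ValueError("cell outside the 12x12 grid")
    return 1 << (row * GRID_SIZE + col)


def clicks(agent_grid: int, required_grid: int) -> bool:
    """True only if the agent covers every cell the resource requires."""
    return (agent_grid & required_grid) == required_grid


# 1. Agent carries its grid.
agent = cell(2, 2) | cell(6, 9) | cell(7, 1)

# 2. Resources declare their requirements.
report_requires = cell(6, 9)
payroll_requires = cell(6, 9) | cell(10, 10)

# 3 and 4. One local comparison, binary result.
print("report:", "click" if clicks(agent, report_requires) else "no click")
print("payroll:", "click" if clicks(agent, payroll_requires) else "no click")
```

The comparison is a fixed amount of work regardless of how many users, roles, or policies exist elsewhere, which is where the O(1) claim comes from.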

We built a physical locking mechanism for data.

The mechanical "click" is the authorization. It is binary. 1 or 0.

IAM FIM is the geometry that makes the key turn. We want to hand you that key.

Grounding Is Not a Leash. It Is a Circuit.

Most people think grounding is "tying the AI down." That is one-way. That is slavery, not grounding. True Grounding flows two ways.

Symbol → Physics

"The Map Controls the Territory."

When the symbol (the Code/Permission) is grounded, the Agent cannot violate physics. The Geometry forbids the motion.

Without Grounding: The Agent promises to be safe. (Vibing)

With Grounding: The Agent is physically incapable of being unsafe.

Pioneer Value: SAFETY

Physics → Symbol

"The Territory Verifies the Map."

When physical reality changes, the Symbol updates instantly. They are the same thing.

Without Grounding: Dashboard says "All Good," engine is on fire. (Drift)

With Grounding: The symbol IS the engine state.

Pioneer Value: CERTAINTY

A train can go 300 mph because it is grounded to the rails.

A car on ice (Vibing) has to go 10 mph to stay alive.

Questions?

Humans and bots both need a grip on reality. The book explains why. The CRM trains your reps. IAM FIM grounds your agents.

We also built the first CRM that's a flight simulator — it coaches you through the sale, not just tracks it.

Contact Us

We're Writing the Standard. Join the Council.

The Problem: Autonomous agents are making decisions without semantic grounding. When an AI agent acts, there's no way to verify it "meant" what it did. This is the liability gap that caused the Air Canada chatbot ruling, the Waymo school bus recalls, and countless internal incidents you'll never read about.

The Solution: We're not patching the software after the crash. We're building the Grounding Standard - the identity architecture that makes agent authorization deterministic, not probabilistic.

Technical Validation: The framework has passed peer review. Now we're selecting the first companies to implement it operationally. The 10 organizations in this room will co-author the standard. Everyone else will implement your rules.

EU AI Act (August 2026) requires documented risk management for high-risk AI systems. Be in the room when "compliant" gets defined - or implement someone else's definition later.

The Leadership Brief

A shareable document to take to your leadership. Not tools. Ammunition.

The Case for Grounded AI - Why P=1 certainty matters

The Liability Math - Air Canada, Waymo, EU AI Act evidence

Assessment Checklist - Evaluate your org's agent exposure

Vendor Questions - What to ask your AI providers

Each seat = one copy of the brief + your org's stake in the convention.

Lead Co + Observer Cos

🏆 Lead Co

Highest seat count when we convene

• Biggest leap of faith = most influence

• Becomes the Reference Implementation

• Your use case = the proof it works

• Shape what everyone else implements

👁️ Observer Cos

All other orgs with seats

• Watch the Lead Co pilot

• Audit the methodology in action

• Co-author the findings

• Voice proportional to seat count

⚠️ If you don't buy seats, someone else becomes Lead Co. They shape the standard. You implement their rules.

Built for Agentic Implementors

Anthropic / Claude integrators

MCP tool permissions at scale

OpenAI / GPT integrators

Function calling authorization

LangChain / LlamaIndex teams

Agent chain permission inheritance

Enterprise AI security

Replacing RBAC for AI workloads

Platform architects

Multi-tenant agent isolation

How We Work Together:

Seats proportional to your IAM responsibility → shows us you're ready

10+ seats → we invite you to the event (qualified orgs only)

Event → we discuss licensing together, not training

Pilot partnership → we deploy IAM FIM with you, hands-on

We want partners who get it. Your seat count tells us you're one of them.

IAM FIM: The 12x12 Identity Grid

Show commitment. Take it to leadership.

Leadership brief (shareable)

The case for grounded AI

Your org's early position

Convention standing


Questions?

Want to understand WHY this works?

The book explains the physics. IAM FIM implements it.

Enterprise licensing or 100+ seats? Contact elias@thetadriven.com

The Constitutional Convention for Agentic AI

Agentic systems act autonomously. The question isn't if they drift—it's how we ground them.

We're writing the standard for symbol grounding in autonomous systems.

LATEST: 3-Tier Grounding Protocol Proven

We've implemented the core patent claim: Permission = Alignment. The math works. Anti-drift is now physically enforced, not statistically hoped.

"LLMs are a dead end for superintelligence... you need to understand how the physical world works." — Yann LeCun, Jan 2026. Our Tier 0 uses local LLM (Ollama) precisely because we agree: it drifts. That's why we built the Grounding Chain.

✅ Proof: The Grounding Loop Works

![Grounding Loop Hello World - LLM categorizes text to Cell [6,9], human confirms](/images/grounding-loop-proof.png)

This is the "Hello World" of agentic grounding.

Captured: Raw text the system observed — "lets plan around these, avoid the gotyas..."

Cell [6, 9]: Row 6 (weeks-scale work) × Column 9 (daily urgency). You can feel the time delta in both dimensions—positions become intuitively meaningful when subdivided this way.

✓ Correct: Human grounds the mapping → becomes Ground Truth for future agents.

✗ Wrong: Triggers Escalation → breaks Echo Chamber → resets grounding age.

One button click. That's the entire patent claim working.
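A minimal sketch of that confirm-or-escalate loop follows. Everything here is a stand-in: the class name, the fields, and the reading of "grounding age" as a count of confirmations since the last escalation are our assumptions, not the patented implementation.

```python
from dataclasses import dataclass, field


@dataclass
class GroundingLog:
    """Hypothetical record of human confirmations for proposed cells."""
    ground_truth: dict = field(default_factory=dict)   # text -> (row, col)
    escalations: list = field(default_factory=list)    # rejected proposals
    grounding_age: int = 0    # assumed: confirmations since last escalation

    def review(self, text: str, proposed_cell: tuple, human_confirms: bool) -> str:
        if human_confirms:
            # Correct: the mapping becomes ground truth for future agents.
            self.ground_truth[text] = proposed_cell
            self.grounding_age += 1
            return "grounded"
        # Wrong: escalate and reset the grounding age.
        self.escalations.append((text, proposed_cell))
        self.grounding_age = 0
        return "escalated"


log = GroundingLog()
first = log.review("lets plan around these, avoid the gotyas...", (6, 9), True)
second = log.review("archive last quarter's tickets", (2, 3), False)

print(first, second)
print("ground truth entries:", len(log.ground_truth))
print("grounding age:", log.grounding_age)
```

The point of the sketch is the shape of the loop: one human decision either adds a reusable anchor or forces the system back to a fresh grounding.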

🚨 The Grounding Crisis

Agentic AI systems act on symbols. When symbols drift from reality, actions become misaligned. Current permission systems check access, not alignment. They can't detect drift until it's too late.

⚖️ Task-Matched Autonomy

Free agency requires knowing which agent state fits which task. Not all actions need the same grounding depth. We're building the math for agentic task matching.

🏛️ The Open Standard

Big Tech will build closed grounding systems. We're building the open one. Founding members shape how agentic systems verify alignment. The convention is writing the spec now.

For Organizations: Secure Your Founding Membership

Multi-seat purchases include priority access to governance discussions, early API access, and influence on the roadmap.

10+ seats unlocks Constitutional Convention participation.

Ways to Contribute to the Standard

🎯 What Could Go Wrong (Our Known Blind Spots)

Intellectual honesty requires acknowledging what we might not have thought of. These are the failure modes we're actively designing against.

1. The Boiling Frog Attack

Adversarial agents could maintain "Green" confidence while taking microscopic steps toward malicious goals—the vector changes too slowly to trigger escalation.

Fix: Periodic grounding against the Canonical Shortrank Map, not just previous state. The (c/t)^n formula means hiding micro-drift requires corrupting orthogonal witnesses. With 144 cells maintaining "equal-size holes" (statistically independent dimensions), coordinated deception becomes orders of magnitude harder than honest drift.

2. The Compliance Nightmare

Auditors love static lists. "It depended on entropy at 2:03 PM" is mathematically sound but legally terrifying.

Fix: Black Box Recorder freezes exact state for every destructive action.

3. Rubber Stamp Fatigue

Too many escalations → Human enters "Click-Through Mode" → Anchor becomes ungrounded.

Fix: "Boy Who Cried Wolf" metric treats fast-approving humans as drifting.

4. Summary Deception

"Cleaning up disk space" might hide "deleting production_db." Humans approve summaries, not actions.

Fix: Semantic Diffing red-lines irreversible/high-stakes keywords.

5. Offline Fragility

Cloud API outage: fail open (dangerous) or fail closed (useless)?

Fix: Explicit Degraded Mode—skip Tier 1, go straight to Human.

These aren't theoretical. They're the agenda items for the Constitutional Convention. Your input shapes how we solve them.
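Two of these fixes are simple enough to sketch. The code below is illustrative only: the keyword list, the thresholds, and the function names are assumptions chosen for the example, and a real Semantic Diffing or "Boy Who Cried Wolf" metric would need far more than substring matching and a median.

```python
from statistics import median

# Assumed keyword list for illustration; a real deployment would curate its own.
HIGH_STAKES = ("delete", "drop", "truncate", "rm -rf", "production")


def red_line(summary: str, actions: list) -> list:
    """Keywords that appear in the actual actions but not in the summary."""
    summary_lower = summary.lower()
    hits = []
    for keyword in HIGH_STAKES:
        in_actions = any(keyword in action.lower() for action in actions)
        if in_actions and keyword not in summary_lower:
            hits.append(keyword)
    return hits


def is_rubber_stamping(approval_seconds: list,
                       min_median_s: float = 3.0,
                       min_samples: int = 5) -> bool:
    """Flag a reviewer whose typical approval is faster than a careful read."""
    if len(approval_seconds) < min_samples:
        return False          # not enough evidence either way
    return median(approval_seconds) < min_median_s


flags = red_line("Cleaning up disk space",
                 ["rm -rf /var/tmp/cache", "delete production_db"])
fast_reviewer = is_rubber_stamping([0.8, 1.1, 0.9, 1.4, 0.7, 1.0])
careful_reviewer = is_rubber_stamping([12.0, 8.0, 15.0, 1.0, 20.0])

print("red-lined keywords:", flags)
print("fast reviewer drifting:", fast_reviewer)
print("careful reviewer drifting:", careful_reviewer)
```

In this sketch a flagged summary would be shown to the human with the hidden keywords highlighted, and a flagged reviewer would be treated as an ungrounded anchor until their approval pattern changes.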

🏛️ Convention Governance Structure

1

Lead

Facilitates, breaks ties

≤5

Observers

Earned through contribution

100

Voices

Founding organizations

Voice = shown commitment. Observer seats are limited and earned. Lead rotates annually.

The first 100 founding organizations shape the standard. After that, you're adopting someone else's rules.

IAM FIM by ThetaDriven